Reputation and trust models

The importance of reputation and trust is beyond question in both human and virtual societies. The sociologist Luhmann wrote: "Trust and trustworthiness are necessary in our everyday life. It is part of the glue that holds our society together". Luhmann's observation has also been borne out in virtual societies. The proliferation of electronic commerce sites created the need for mechanisms that ensure and enforce normative behavior while, at the same time, increasing electronic transactions by promoting potential users' trust in the system and in the business agencies (agents) that operate on the site.

In turn, reputation arises as a key component of trust and becomes an implicit artifact of social control. Every society has its own rules and norms that members should follow for the society to function well. The social control exerted by reputation emerges implicitly: non-normative behavior tends to earn an agent a bad reputation, which other agents take into account when selecting their partners, so it can lead to exclusion through social rejection.

One of the fields that makes most use of these concepts is multi-agent systems (MAS). These systems are traditionally composed of discrete, autonomous units called agents that need to interact with each other to achieve their goals. The parallel with human societies is obvious, and so are the problems, especially in open MAS. The main feature that characterizes open multi-agent systems is that the intentions of the agents are unknown. Hence, because their potential behavior is uncertain, we need mechanisms to control the interactions among agents and to protect good agents from fraudulent entities.

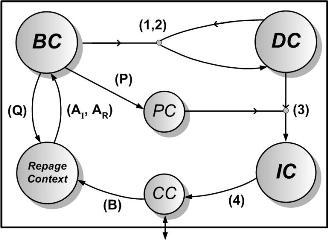

Figure 1. The Repage architecture

Computational trust and reputation models have been recognized as one of the key technologies required to design and implement agent systems. These models manage and aggregate the information that agents need to select partners efficiently in uncertain situations. For simple applications, a game-theoretical approach similar to the one used in most models may suffice. However, to address the problems found in socially complex virtual societies, we need more sophisticated trust and reputation systems. In this context, the decisions that agents make on the basis of reputation take on special relevance and can be as important as the reputation model itself.
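To make the partner-selection role concrete, here is a deliberately simple Python sketch of the kind of aggregation a basic reputation model might perform. It is not RepAge: it only takes a weighted mean of numeric reports, counting the agent's own experiences more heavily, and excludes candidates that are unknown or rated below a threshold. The `Report` fields, the [0, 1] scale, `direct_weight` and `threshold` are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Report:
    """One evaluation of `target` by `source` on a hypothetical [0, 1] scale."""
    source: str
    target: str
    score: float   # 0.0 = very bad, 1.0 = very good
    direct: bool   # True if it comes from the agent's own interaction


def aggregate(reports, target, direct_weight=2.0):
    """Weighted mean of all reports about `target`.

    Direct experiences weigh `direct_weight` times as much as third-party
    reports. Returns None when nothing is known about the target."""
    relevant = [r for r in reports if r.target == target]
    if not relevant:
        return None
    weights = [direct_weight if r.direct else 1.0 for r in relevant]
    total = sum(w * r.score for w, r in zip(weights, relevant))
    return total / sum(weights)


def select_partner(reports, candidates, threshold=0.5):
    """Return the best-rated candidate, or None if nobody qualifies.

    Unknown candidates and those rated below `threshold` are excluded,
    a crude stand-in for the social rejection of badly reputed agents."""
    scored = {c: aggregate(reports, c) for c in candidates}
    eligible = {c: s for c, s in scored.items() if s is not None and s >= threshold}
    if not eligible:
        return None
    return max(eligible, key=eligible.get)


reports = [
    Report("me", "alice", 0.9, True),
    Report("bob", "alice", 0.7, False),
    Report("bob", "carol", 0.2, False),
    Report("dave", "carol", 0.3, False),
]
print(select_partner(reports, ["alice", "carol", "eve"]))  # alice
```

Here "alice" scores (2 × 0.9 + 0.7) / 3 ≈ 0.83, "carol" scores 0.25 and is excluded, and "eve" is excluded because nothing is known about her. A cognitive model such as RepAge is meant to go well beyond this kind of single-number summary.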

In this paper, we propose a possible integration of a cognitive model of reputation, called RepAge, into a BDI cognitive agent. A BDI agent is an agent that uses beliefs, desires and intentions to reason and make decisions.

First, we specify a belief logic able to capture the semantics of the cognitive model of reputation RepAge (i.e., a logic expressive enough to represent the concepts that this model incorporates). From a technical standpoint, the logic is defined by a two-level hierarchy of first-order languages, which allows axioms to be specified as first-order theories.
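As a rough illustration of the two levels, the Python sketch below keeps RepAge-style atoms (an image or a reputation about a target playing a role) at the first level and graded beliefs about those atoms at the second. It assumes, for illustration only, that an evaluation is a probability distribution over five rating labels and that each label with non-zero probability becomes one graded belief. The class names, the `LABELS` scale and `to_beliefs` are hypothetical, and the sketch does not reproduce the paper's axioms.

```python
from dataclasses import dataclass

# Hypothetical five-label rating scale (an assumption, not taken from the text).
LABELS = ("VBad", "Bad", "Neutral", "Good", "VGood")


@dataclass(frozen=True)
class Img:
    """Level-1 atom: the agent's own image of `target` playing `role`."""
    target: str
    role: str
    label: str


@dataclass(frozen=True)
class Rep:
    """Level-1 atom: the reputation said to circulate about `target` in `role`."""
    target: str
    role: str
    label: str


@dataclass(frozen=True)
class Belief:
    """Level-2 formula: a graded belief whose content is a level-1 atom."""
    about: object  # an Img or Rep instance
    degree: float  # strength of the belief, in [0, 1]


def to_beliefs(atom_cls, target, role, dist):
    """Turn an evaluation (distribution over LABELS) into level-2 beliefs.

    Labels with zero probability produce no belief."""
    if (len(dist) != len(LABELS) or any(p < 0 for p in dist)
            or abs(sum(dist) - 1.0) > 1e-9):
        raise ValueError("expected a probability distribution over LABELS")
    return [Belief(atom_cls(target, role, label), p)
            for label, p in zip(LABELS, dist) if p > 0]


beliefs = to_beliefs(Img, "alice", "seller", [0.0, 0.1, 0.2, 0.4, 0.3])
for b in beliefs:
    print(b)
```

Keeping the level-1 atoms as plain immutable values means level-2 formulas can refer to them directly, which mirrors (in a very simplified way) the idea of one language talking about the formulas of another.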

The defined logic allows us to express the two main concepts of the RepAge model, image and reputation, in terms of beliefs. These concepts are foreign to the reasoning mechanism of a BDI agent, which only understands beliefs, desires and intentions; expressed as beliefs, however, they can be incorporated naturally into its reasoning process. In our case, we specify and implement a BDI agent that incorporates RepAge and this logic using a formalism called multi-context specification, which yields a very clean specification that is close to implementation.
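The Python sketch below is not the authors' specification; it only illustrates the general shape of a multi-context system: separate contexts (units) that each hold formulas, plus bridge rules that move information between them until nothing new can be derived. The context names (`repage`, `beliefs`, `desires`, `intentions`), the tuple encoding of formulas, the `POSITIVE` label set, the 0.5 threshold and the `choose_seller` decision policy are all hypothetical.

```python
from typing import Callable, Dict, Iterable, Set, Tuple

# Hypothetical tuple encoding of formulas, e.g. ("img", target, role, label, degree).
Formula = Tuple
Contexts = Dict[str, Set[Formula]]
BridgeRule = Callable[[Contexts], Iterable[Tuple[str, Formula]]]

POSITIVE = {"Good", "VGood"}  # labels counted as support for choosing a partner


def run_bridge_rules(contexts: Contexts, rules: Iterable[BridgeRule]) -> Contexts:
    """Apply every bridge rule repeatedly until none adds a new formula."""
    rules = list(rules)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            # Materialise the conclusions before mutating any context.
            for ctx_name, formula in list(rule(contexts)):
                if formula not in contexts[ctx_name]:
                    contexts[ctx_name].add(formula)
                    changed = True
    return contexts


def repage_to_beliefs(ctx: Contexts):
    """Bridge rule: each image held in the RepAge context becomes a graded belief."""
    for f in ctx["repage"]:
        if f[0] == "img":
            _, target, role, label, degree = f
            yield "beliefs", ("B", ("img", target, role, label), degree)


def choose_seller(ctx: Contexts, min_degree: float = 0.5):
    """Bridge rule: a desire to buy plus enough positive belief -> intention."""
    support: Dict[str, float] = {}
    for f in ctx["beliefs"]:
        if f[0] == "B" and f[1][0] == "img":
            _, target, role, label = f[1]
            if role == "seller" and label in POSITIVE:
                support[target] = support.get(target, 0.0) + f[2]
    for desire in ctx["desires"]:
        if desire[0] == "buy":
            for target, s in support.items():
                if s >= min_degree:
                    yield "intentions", ("buy_from", target, desire[1])


ctx: Contexts = {
    "repage": {
        ("img", "alice", "seller", "Good", 0.4),
        ("img", "alice", "seller", "VGood", 0.3),
        ("img", "bob", "seller", "Bad", 0.6),
        ("img", "bob", "seller", "Good", 0.2),
    },
    "beliefs": set(),
    "desires": {("buy", "book")},
    "intentions": set(),
}
run_bridge_rules(ctx, [repage_to_beliefs, choose_seller])
print(ctx["intentions"])  # {('buy_from', 'alice', 'book')}
```

The first bridge rule plays the role of translating reputation information into beliefs; the second shows how, once that information lives in the belief context, ordinary BDI-style reasoning over beliefs and desires can produce an intention. "alice" accumulates 0.7 of positive belief and is chosen, while "bob" reaches only 0.2.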

References

I. Pinyol, J. Sabater-Mir, P. Dellunde and M. Paolucci. Reputation-based decisions for logic-based cognitive agents. Autonomous Agents and Multi-Agent Systems 24(1): 175-216 (2012).